High-definition (HD) map construction methods provide the precise and comprehensive static environmental information that autonomous driving systems depend on. Camera-LiDAR fusion techniques have shown promising results by integrating data from both modalities, but existing approaches focus primarily on improving model accuracy and often neglect the robustness of perception models, which is critical for real-world applications. In this paper, we explore strategies to enhance the robustness of multi-modal fusion methods for HD map construction while maintaining high accuracy. We propose three key components: data augmentation, a novel multi-modal fusion module, and a modality dropout training strategy. These components are evaluated on a challenging dataset containing 13 types of multi-sensor corruption. Experimental results demonstrate that our proposed modules significantly enhance the robustness of baseline methods. Furthermore, our approach achieves state-of-the-art performance on the clean validation set of the nuScenes dataset. Our findings provide valuable insights for developing more robust and reliable HD map construction models, advancing their applicability in real-world autonomous driving scenarios.

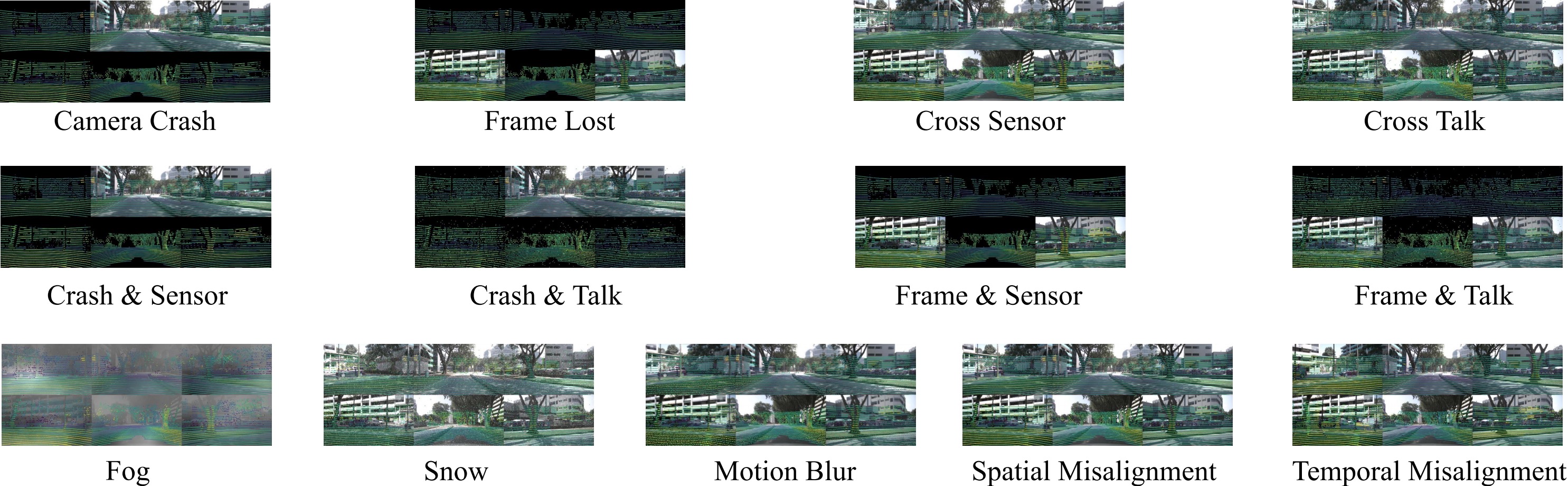

Overview of the Multi-Sensor Corruption dataset. Multi-Sensor Corruption includes 13 types of synthetic camera-LiDAR corruption combinations that perturb both camera and LiDAR inputs, either separately or concurrently.
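To make the "separately or concurrently" idea concrete, the following is a minimal sketch of how one corruption might be applied to the camera input, the LiDAR input, or both. The two corruption functions (`camera_blackout`, `lidar_point_drop`), their parameters, and the `mode` argument are hypothetical illustrations; they are not taken from the dataset and do not correspond to its 13 corruption types.

```python
import numpy as np

def camera_blackout(images, rng, drop_prob=0.5):
    """Zero out each camera view independently (hypothetical corruption).

    images: array of shape (num_views, H, W, C).
    """
    keep = rng.random(images.shape[0]) >= drop_prob
    return images * keep[:, None, None, None]

def lidar_point_drop(points, rng, drop_ratio=0.5):
    """Randomly discard a fraction of LiDAR points (hypothetical corruption).

    points: array of shape (num_points, num_features).
    """
    keep = rng.random(points.shape[0]) >= drop_ratio
    return points[keep]

def corrupt_sample(images, points, mode, seed=0):
    """Corrupt the camera input, the LiDAR input, or both.

    mode: "camera", "lidar", or "both" (names assumed for this sketch).
    """
    if mode not in ("camera", "lidar", "both"):
        raise ValueError(f"unknown mode: {mode}")
    rng = np.random.default_rng(seed)
    if mode in ("camera", "both"):
        images = camera_blackout(images, rng)
    if mode in ("lidar", "both"):
        points = lidar_point_drop(points, rng)
    return images, points
```

Calling `corrupt_sample(images, points, "both")` perturbs the two sensors concurrently, while `"camera"` or `"lidar"` leaves the other modality untouched.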

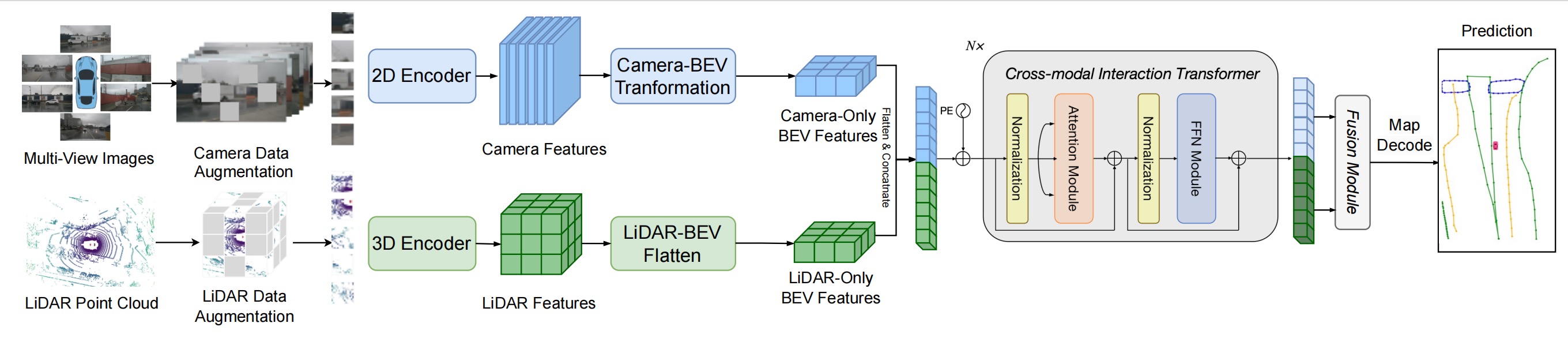

Overview of the RoboMap Framework. The RoboMap framework begins by applying data augmentation to both camera images and LiDAR point clouds. Next, features are efficiently extracted from the multi-modal sensor inputs and transformed into a unified Bird’s-Eye View (BEV) space using view transformation techniques. We then introduce a novel multi-modal BEV fusion module to effectively integrate features from both modalities. Finally, the fused BEV features are passed through a shared decoder and prediction heads to generate high-definition (HD) maps.
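As a rough illustration of the fusion stage together with the modality dropout training strategy, the sketch below zeroes one modality's BEV features with some probability during training and then fuses the two feature maps. It rests on several assumptions: the dropout probability `p_drop`, the rule that at most one modality is dropped per sample, and the plain channel concatenation in `concat_fusion` are placeholders chosen for this example. The concatenation in particular is a simple stand-in and not the fusion module proposed in the paper.

```python
import numpy as np

def modality_dropout(cam_bev, lidar_bev, rng, p_drop=0.2, training=True):
    """With probability p_drop, zero out exactly one modality's BEV features.

    cam_bev, lidar_bev: arrays of shape (channels, H, W) on the same BEV grid.
    Both modalities are never dropped together (an assumption of this sketch).
    """
    if not training or rng.random() >= p_drop:
        return cam_bev, lidar_bev
    if rng.random() < 0.5:
        return np.zeros_like(cam_bev), lidar_bev
    return cam_bev, np.zeros_like(lidar_bev)

def concat_fusion(cam_bev, lidar_bev):
    """Stand-in fusion: concatenate the two BEV feature maps along channels."""
    return np.concatenate([cam_bev, lidar_bev], axis=0)

rng = np.random.default_rng(0)
cam_bev = np.ones((8, 16, 16), dtype=np.float32)     # placeholder camera BEV features
lidar_bev = np.ones((4, 16, 16), dtype=np.float32)   # placeholder LiDAR BEV features

cam_in, lidar_in = modality_dropout(cam_bev, lidar_bev, rng, p_drop=0.2)
fused = concat_fusion(cam_in, lidar_in)               # shape (12, 16, 16)
```

Training with one modality occasionally missing is intended to push the decoder toward producing usable maps when a sensor is corrupted or unavailable at test time; at inference, `training=False` passes both feature maps through unchanged.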

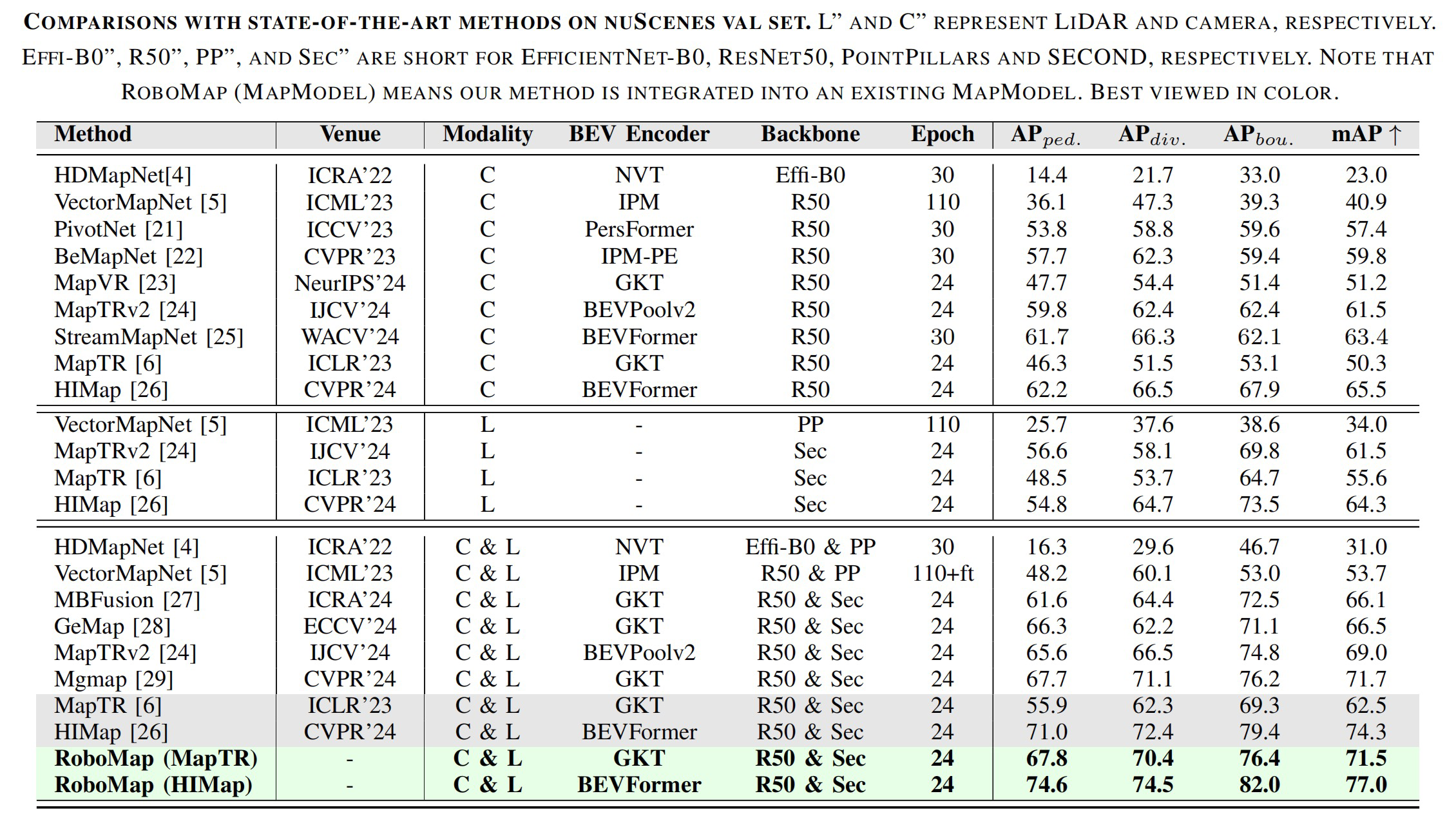

Comparison with state-of-the-art methods on the nuScenes val set. L and C denote LiDAR and camera, respectively. Effi-B0, R50, PP, and Sec are short for EfficientNet-B0, ResNet50, PointPillars, and SECOND, respectively. RoboMap (MapModel) denotes our method integrated into an existing map construction model (MapModel).

RSc and mRS scores for the original MapTR model and its variants. RSc uses mAP as the metric.

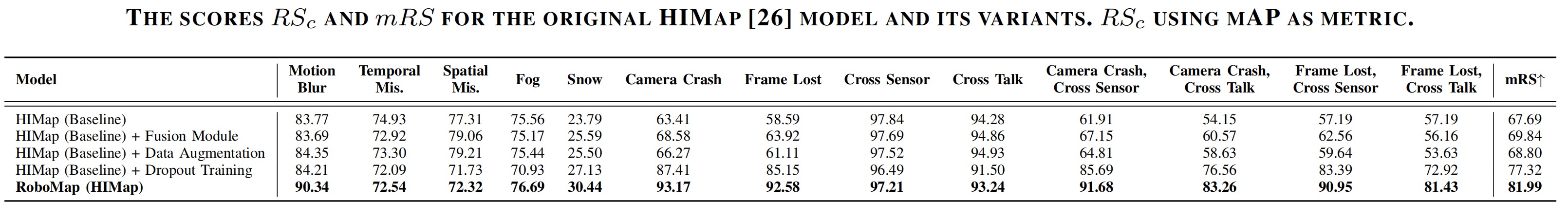

RSc and mRS scores for the original HIMap model and its variants. RSc uses mAP as the metric.

Ablation of the impact of different modules on the HD map construction task using clean data.

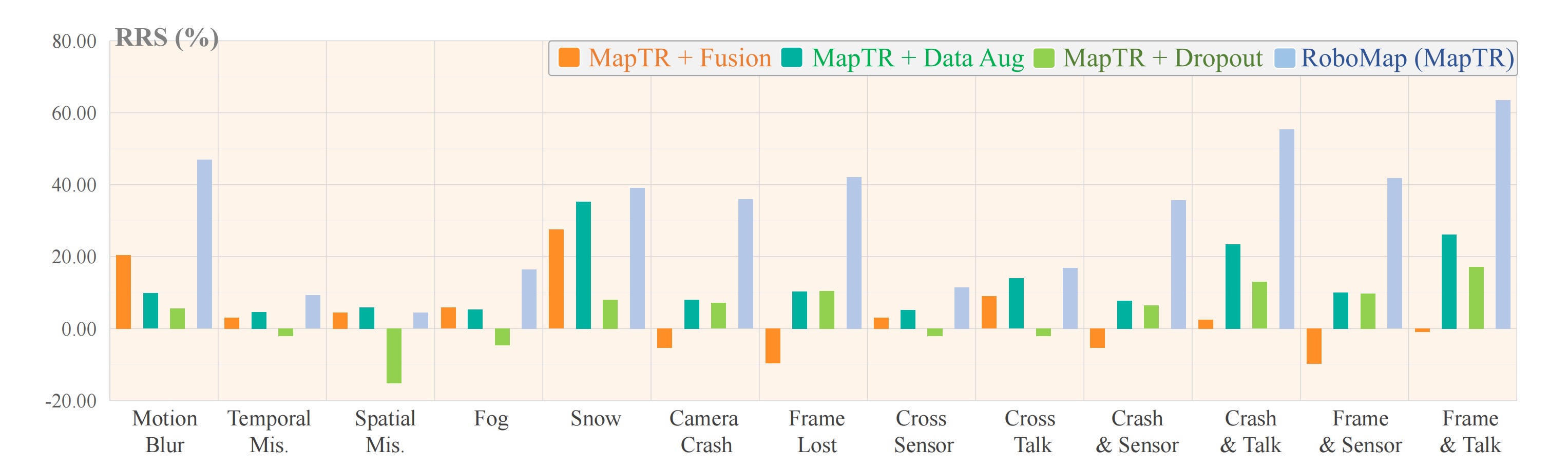

Relative robustness visualization. Relative Resilience Score (RRS) computed with mAP, using the original MapTR as the baseline.

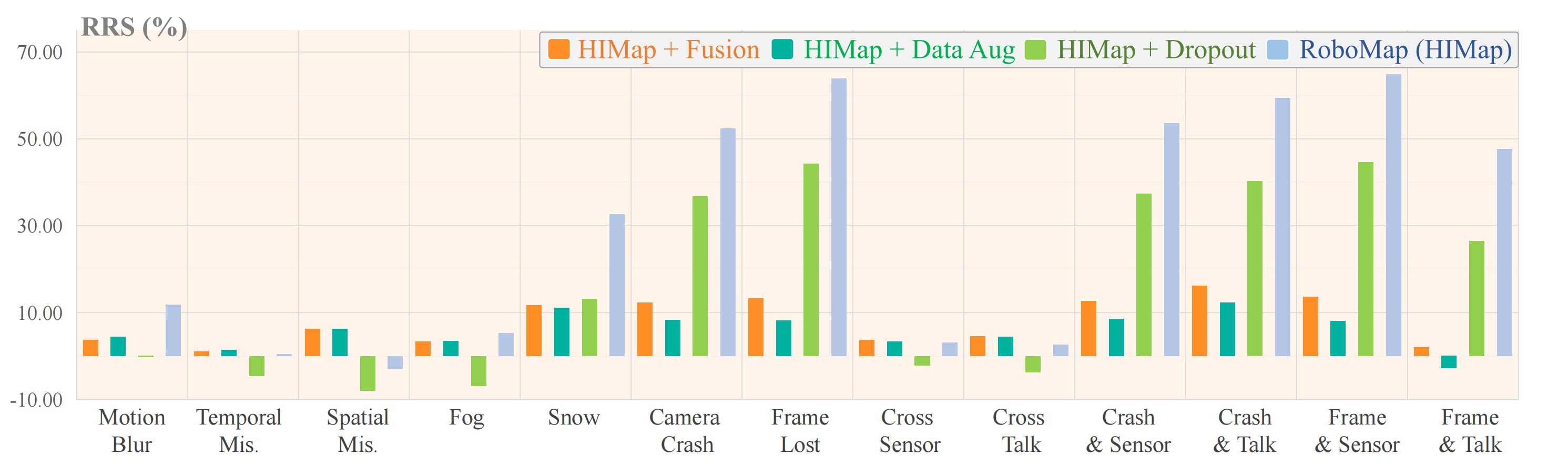

Relative robustness visualization. Relative Resilience Score (RRS) computed with mAP, using the original HIMap as the baseline.
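The captions name RSc, mRS, and RRS without defining them. The sketch below shows one plausible way such scores could be computed from mAP values. The formulas are assumptions modeled on common corruption-benchmark practice: RS for a corruption is taken as the mean corrupted mAP divided by the clean mAP, mRS as the average RS over corruption types, and RRS as the relative change in summed corrupted mAP against a baseline model. The function names are hypothetical, and the definitions used in the paper may differ.

```python
def resilience_score(corrupted_maps, clean_map):
    """Assumed RS: fraction of clean mAP retained, averaged over severity levels."""
    return sum(corrupted_maps) / (len(corrupted_maps) * clean_map)

def mean_resilience_score(maps_by_corruption, clean_map):
    """Assumed mRS: average RS over all corruption types.

    maps_by_corruption: dict mapping corruption name -> list of mAP values.
    """
    scores = [resilience_score(v, clean_map) for v in maps_by_corruption.values()]
    return sum(scores) / len(scores)

def relative_resilience_score(model_maps, baseline_maps):
    """Assumed RRS: relative gain (or loss) in corrupted mAP versus a baseline."""
    return sum(model_maps) / sum(baseline_maps) - 1.0
```

Under these assumed definitions, a positive RRS means the model retains more mAP than the baseline under the same corruption, and a negative RRS means it retains less.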

The datasets and benchmarks are released under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.